A compilation of the best podcast clips on ML engineering (PLUS: Lessons learned from 45 years in software, OpenAI's recent breakthroughs, and more!)

I hope you've had a good start to 2021! Between holiday travel, annual review, and additional content production, it's been more hectic for me than I would prefer, but I'm starting to get back into a rhythm of good habits.

On to the newsletter! In this week's edition:

- A compilation episode of the best podcast clips on ML engineering

- Lessons Learned after 45 Years in Software

- OpenAI's Multi-Modal Breakthroughs

- A Cambrian explosion of Vision Transformer research

- Machine Learning Is Going Real-Time

Best of ML Engineered in 2020 Part 1 - ML Engineering

I originally intended for the 2020 podcast compilation to be only one episode, but after looking through all the clips I wanted to include, I decided to break it up into two by topic: one this week on ML engineering, and one next week on ML research and careers.

Featured in this episode:

- Josh Tobin discusses why putting machine learning into production is so hard and what the future of ML systems looks like.

- Shreya Shankar presents her checklist for building production ML models and talks about unexpectedly useful skills for ML engineers.

- Luigi Patruno details how ML teams can be more rigorous in their engineering and the importance of approaching your work from the business perspective.

- Andreas Jansson shares what he's learned from talking to data scientists and MLEs about their tools and workflows, and where those might be headed with his new project, Replicate.

Click here to listen to the episode, or find it in your podcast player of choice: https://www.mlengineered.com/listen

Lessons Learned from a 45-Year Software Career

It’s quite amazing that the entire software industry is young enough that many of its early pioneers are just starting to retire. A blog post was shared with me recently by a past guest, swyx, with some deceptively simple lessons from someone who’s been in the industry for over four decades.

Check it out here and also see the Hacker News comments for additional insight.

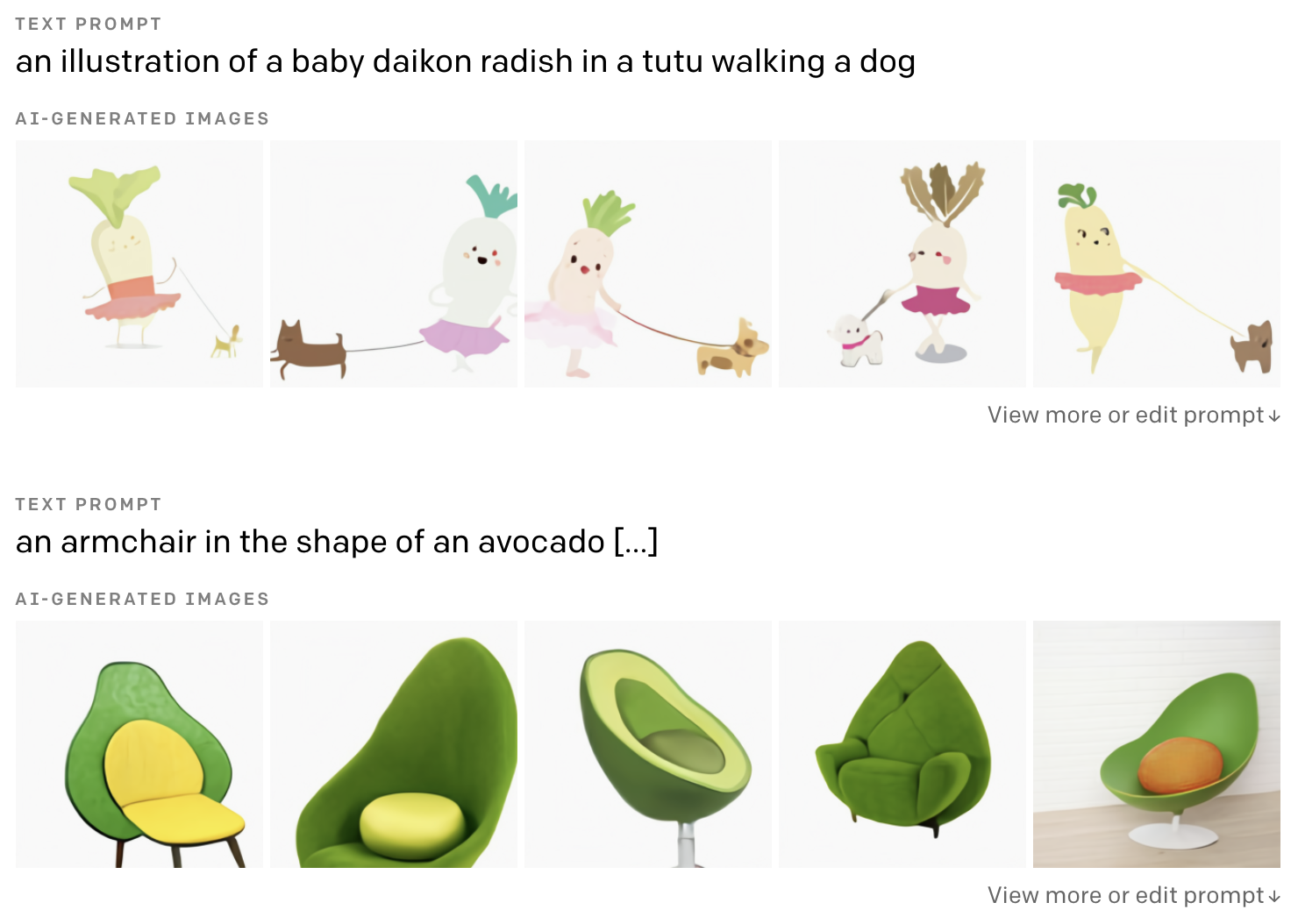

OpenAI's Ridiculous Multi-Modal Breakthroughs

On Tuesday, OpenAI dropped two incredible blog posts detailing their recent work on extending GPT-3 for multi-modal learning. This is the type of stuff that makes me extremely excited to be working in this field.

Vision Transformers continued

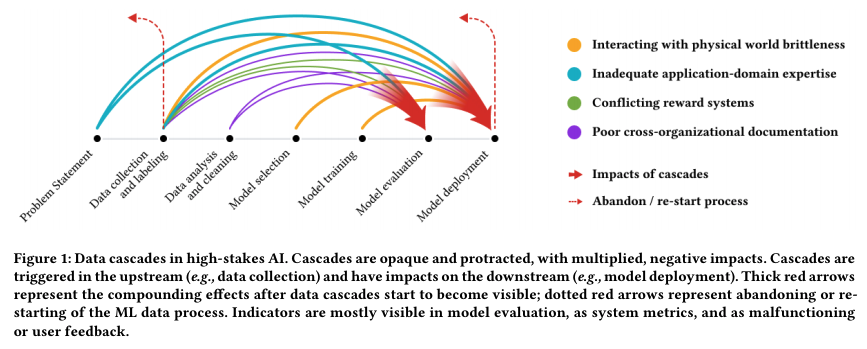

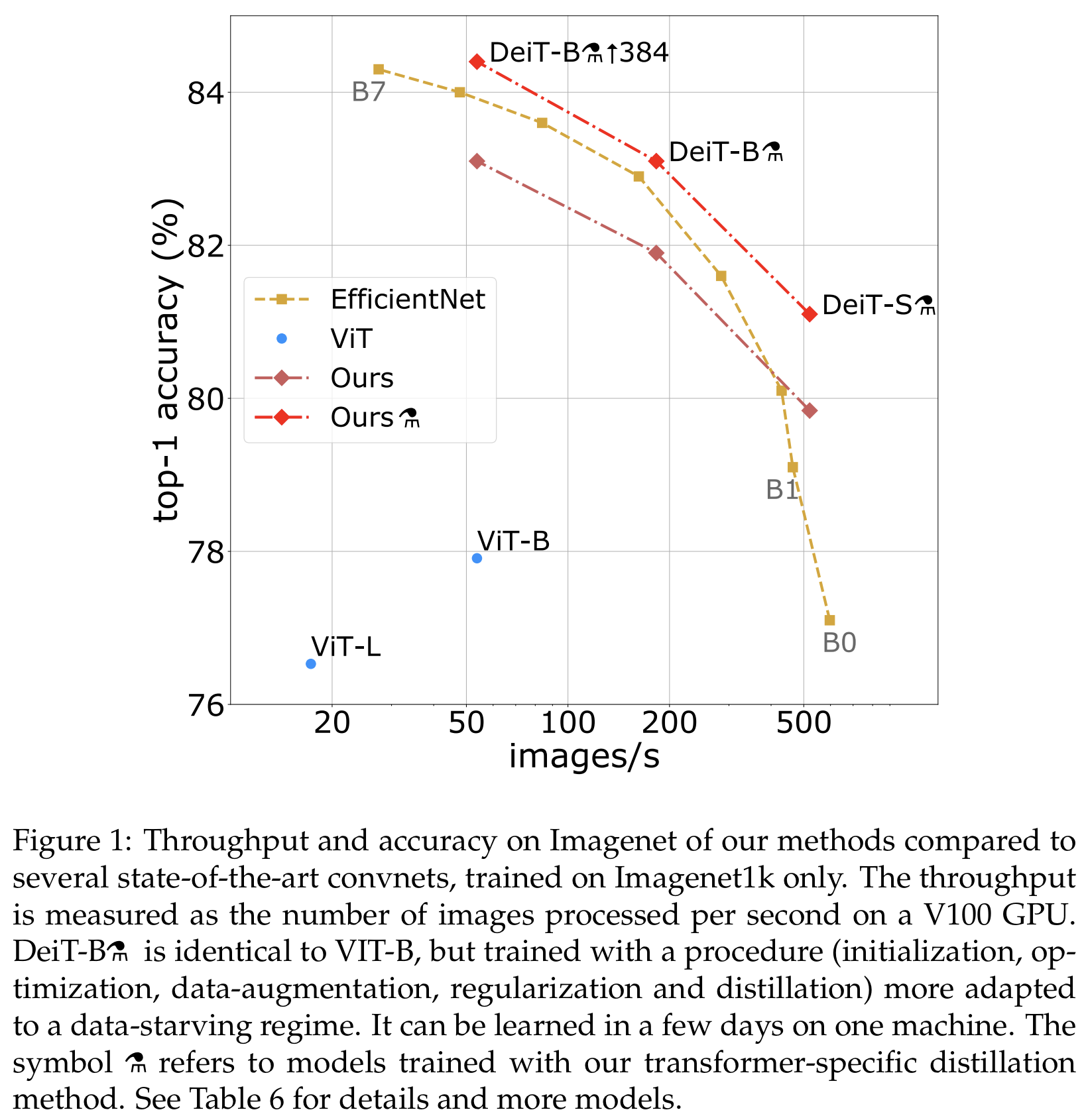

A few months back, Google made a splash in the research scene with the release of their Vision Transformers paper (which I covered in this edition of the newsletter). Since then, we’ve seen a Cambrian explosion of follow-on research, including OpenAI’s multi-modal work above and Facebook’s DeiT, a more data-efficient ViT with a new transformer-specific knowledge distillation technique (see their results on ImageNet below).

There’s also been research into interpreting and explaining what’s going on behind the scenes of these models.

Jacob Gildenblat has an excellent blog post showing various techniques for explainability applied to one of Facebook’s released models. Check it out here.

If you want to dive deeper into this, Facebook also has a recent paper introducing additional techniques for transformers: Transformer Interpretability Beyond Attention Visualization

And to get a broader overview of all the research that’s been done on this topic, a survey on ViTs was recently published: Transformers in Vision: A Survey

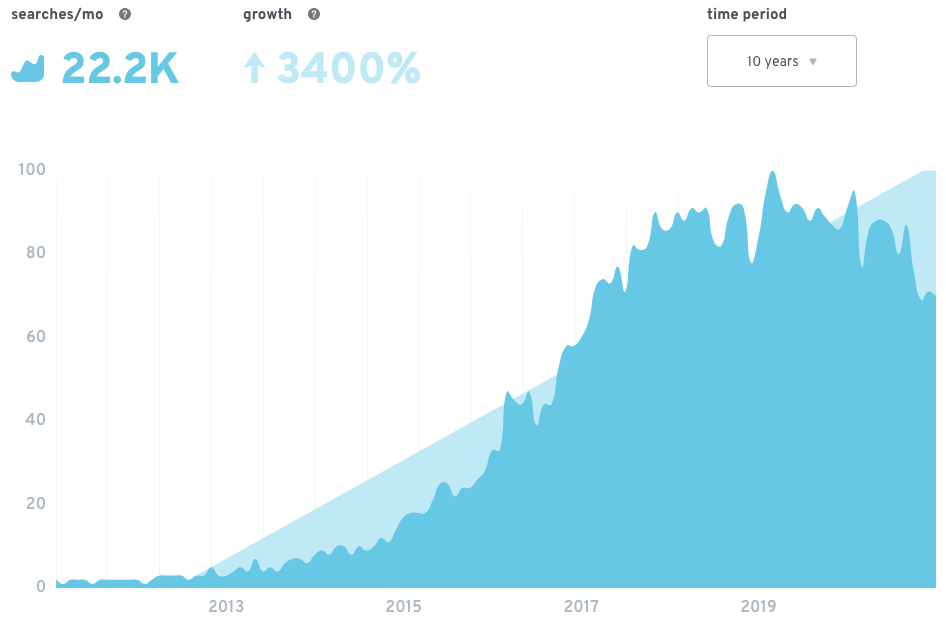

Machine Learning Is Going Real-Time

Chip Huyen is one of the best bloggers on ML engineering today, and she recently published an article on the trend of more ML systems needing to make online predictions and what it takes to get there. I especially enjoyed the latter section on how companies handle model testing after online training.

Check it out here: Machine Learning is Going Real-Time
